A Telescope, Pointed at the Stars

Thesis

We believe mechanistic understanding is the foundation for entirely new architectures, capabilities, and safety tools. Endlessly throwing tricks and compute at problems can get you to the next benchmark, but it rarely tells you why something works or how to build on it. The most powerful breakthroughs will come from deeply understanding how models work.

Acceleration

The past year has seen models evolve at breathtaking speed. Perhaps the strongest example is the emergence and ongoing development of reasoning models, beginning with OpenAI’s o1 and followed by a proliferation of test-time scaling approaches (DeepSeek-R1, Qwen3, s1, etc.)[1][2][3][4].

In the world of open source, we finally have open-weight MoE models at frontier scale and quality, released by Qwen, Kimi, DeepSeek, and most recently OpenAI[5][6]. With such strong models freely available, it’s an exciting time to be studying their inner workings.

The past two years have also seen amazing progress in interpretability, particularly in scalable and causal approaches for transformer-based language models. The key bottleneck of superposition, whereby neurons do not cleanly correspond to features, was identified¹, characterized, and used to motivate tools such as sparse autoencoders (SAEs)², cross-layer transcoders (CLTs), Mixture of Linear Transforms (MOLT), and more[7][8].
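
To make the SAE idea concrete, here is a minimal sketch of the forward pass and training objective: an activation vector is encoded into a wider, non-negative feature vector and then reconstructed as a sum of decoder directions, with an L1 penalty encouraging most features to stay at zero. It assumes the common ReLU-encoder / linear-decoder form; the dimensions, random weights, and `l1_coeff` value are hypothetical placeholders, not settings from any of the cited work.

```python
import numpy as np

rng = np.random.default_rng(0)

d_model, d_sae = 16, 64  # hypothetical sizes: the feature dictionary is wider than the activation space

# Randomly initialised parameters; a real SAE would learn these by gradient descent
W_enc = rng.normal(scale=0.1, size=(d_model, d_sae))
b_enc = np.zeros(d_sae)
W_dec = rng.normal(scale=0.1, size=(d_sae, d_model))
b_dec = np.zeros(d_model)


def sae_forward(x):
    """Encode activations into non-negative feature activations, then reconstruct them."""
    f = np.maximum(0.0, (x - b_dec) @ W_enc + b_enc)  # ReLU keeps feature activations non-negative
    x_hat = f @ W_dec + b_dec                          # reconstruction = weighted sum of decoder rows
    return f, x_hat


def sae_loss(x, l1_coeff=1e-3):
    """Squared reconstruction error plus an L1 penalty that pushes features toward sparsity."""
    f, x_hat = sae_forward(x)
    mse = np.mean(np.sum((x - x_hat) ** 2, axis=-1))
    l1 = np.mean(np.sum(np.abs(f), axis=-1))
    return mse + l1_coeff * l1


x = rng.normal(size=(8, d_model))  # a batch of 8 hypothetical activation vectors
f, x_hat = sae_forward(x)
loss = sae_loss(x)
```

In practice the decoder rows are usually norm-constrained and the parameters are trained on large activation datasets; both are omitted here to keep the sketch short.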

Excitingly, these approaches have yielded concrete insights into the inner workings of models, with potential downstream applications. To highlight a few:

- Anthropic’s Biology of a Language Model[9]: Anthropic applied CLTs and circuit analysis to dissect a set of behaviors, such as addition, in their frontier language model. They discovered fascinating heuristics the model uses for seemingly simple symbolic tasks, and described how these heuristics may fail.

- Goodfire’s Mech Interp on Evo[10]: Goodfire applied SAEs to Evo 2, a frontier DNA foundation model. Notably, the interpretability work was performed as part of the model’s core technical report, and the team surfaced interpretable, biologically plausible features.

- OpenAI’s Persona Features Control Emergent Misalignment[11]: By applying SAEs, OpenAI found a single feature which could be manipulated (suppressed or amplified) to precisely modulate emergent misalignment, and which could therefore be used as an oversight tool during deployment.

Lead to Gold

Despite the advances mentioned above, much of modern ML research feels more akin to alchemy than to chemistry, relying heavily on empirical experimentation without a full grasp of the underlying mechanisms.

In the Bitter Lesson, Richard Sutton famously wrote[12]:

The biggest lesson that can be read from 70 years of AI research is that general methods that leverage computation are ultimately the most effective, and by a large margin.

The Bitter Lesson has turned out to be mostly true. Approaches that encode human priors and predefined strategies are consistently outperformed by approaches that throw data and compute at the problem. A salient example is the recent IMO gold-medal performance by OpenAI and Google DeepMind’s Gemini, where simple, unstructured reasoning outperformed sophisticated agents coupling symbolic proof search with LLMs, such as ByteDance’s SeedProver[13][14].³

However, scale alone does not adequately explain why certain ideas succeed or fail. In particular, the Bitter Lesson is not a claim that we should not do science (though many people take it as such) - it’s a claim that science should be unbiased and scalable.

This is the impetus for Tilde: to scientifically deconstruct models and use that newfound understanding to build more capable future systems - leveraging, not fighting, the Bitter Lesson.

We reject the notion of placing a hundred bets and keeping those that improve benchmark scores; our mission is to take a handful of carefully placed gambits and deeply understand why they succeed or fail. In doing so, we aim to design intelligence that pushes outside the local envelope of current strategies.

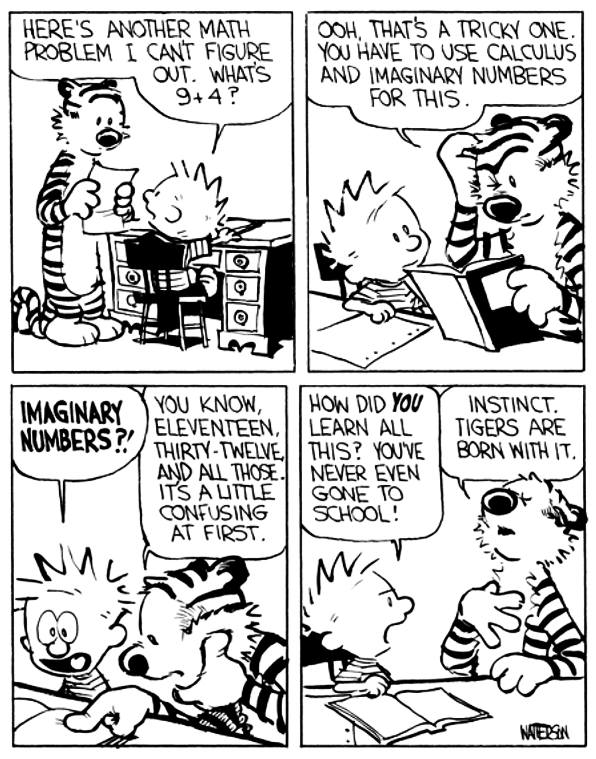

Hobbes would make a great frontier model. Credit: Calvin & Hobbes by Bill Watterson

Inspiration

The approach we outline is not our invention, but it is our guiding star. Time and again, the most fascinating scientific breakthroughs have leveraged mechanistic characterization and insight. For example:

- Mendeleev's Periodic Table: Mendeleev discovered the periodic table by arranging the known elements in order of increasing atomic mass and grouping them by similar chemical properties. This revealed a repeating pattern that allowed him to predict the existence and properties of undiscovered elements.

- Structural Engineering and the Akashi Kaikyo Bridge: The development of the Akashi Kaikyo Bridge was possible only through advanced structural modeling and simulation software. Had the engineering principles behind the Brooklyn Bridge, which were inefficient and excessive by comparison, been naively carried over, the Akashi Kaikyo Bridge would have required many times more material to construct.

Moreover, pursuing progress without a firm grasp of foundational understanding is prone to failure. In the early 20th century, the Italian School of algebraic geometry made deep advances in algebraic surfaces, at first with rigor, but gradually relied on intuition and incomplete arguments. Unverified assumptions and even false results entered the literature, and uncertainty over what was truly proven stalled the field until Zariski, Weil, and others restored a rigorous foundation.

The Italian School provides a cautionary tale for modern ML: we throw many tricks at a problem, benchmark them, and keep what works. And to its credit, this bag of tricks, annealed by countless hours of iteration, is astoundingly effective at producing models that can perform at or above human levels on many useful tasks.

By contrast, there are several lines of work within the sequence modeling and architecture research literature which do exemplify the “development through understanding” approach outlined above (despite not typically being classified as “interpretability” papers). In particular:

- Fu et al.'s H3 builds on Olsson et al.'s work on induction heads by identifying synthetic tasks (induction head and associative recall) on which existing state-space models underperform attention. This insight motivates the design of the H3 layer, which introduces induction-like mechanisms into state-space models[15].

- Zhu et al.'s Transformers without Normalization observes that layer normalization in Transformers often produces tanh-like, S-shaped input-output mappings. Leveraging this insight, the authors replace normalization layers with Dynamic Tanh (DyT), an element-wise tanh(αx) with a learnable scalar α, thereby simplifying the architecture and eliminating the need to compute activation statistics[17].
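
The contrast between the two normalization schemes can be sketched in a few lines. This assumes the DyT form γ·tanh(αx) + β (learnable scalar α, per-channel γ and β); the `alpha=0.5` value and the array sizes below are illustrative only, not settings taken from Zhu et al.

```python
import numpy as np


def layer_norm(x, gamma, beta, eps=1e-5):
    """LayerNorm over the last axis: requires per-token mean and variance statistics."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def dyt(x, alpha, gamma, beta):
    """Dynamic Tanh: element-wise squashing that needs no activation statistics.

    alpha is a (learnable) scalar; gamma and beta are per-channel affine parameters.
    """
    return gamma * np.tanh(alpha * x) + beta


d_model = 8
x = np.random.default_rng(0).normal(scale=3.0, size=(4, d_model))  # 4 hypothetical tokens
gamma, beta = np.ones(d_model), np.zeros(d_model)

y_ln = layer_norm(x, gamma, beta)
y_dyt = dyt(x, alpha=0.5, gamma=gamma, beta=beta)  # alpha=0.5 is an illustrative value
```

Because DyT acts element-wise, perturbing one channel of a token leaves the other channels' outputs untouched, whereas every LayerNorm output depends on the whole token's mean and variance.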

H3, in particular, relied on the deep mechanistic characterization of induction heads presented in Anthropic’s In-context Learning and Induction Heads to design a sub-quadratic architecture that could solve the same intentionally crafted synthetic tasks[18].
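
As a concrete picture of such a synthetic task, the sketch below generates associative-recall style examples (key-value pairs followed by a query key) and implements the idealised copy rule an induction head is thought to perform: find the previous occurrence of the current token and predict whatever followed it. The token vocabularies, sequence format, and function names are our own illustrative assumptions, not the H3 benchmark code.

```python
import random


def make_associative_recall(num_pairs, keys="abcdefgh", values="0123456789", seed=None):
    """Build one example: key-value pairs, then a query key; the target is its paired value."""
    rng = random.Random(seed)
    chosen_keys = rng.sample(keys, num_pairs)       # distinct keys, so each maps to one value
    pairs = [(k, rng.choice(values)) for k in chosen_keys]
    query, target = rng.choice(pairs)
    tokens = [tok for pair in pairs for tok in pair] + [query]
    return tokens, target


def induction_rule(tokens):
    """Idealised induction head: find the latest earlier copy of the last token, copy its successor."""
    last = tokens[-1]
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == last:
            return tokens[i + 1]
    return None  # the last token never appeared before


tokens, target = make_associative_recall(num_pairs=4, seed=0)
print(" ".join(tokens), "->", target)
assert induction_rule(tokens) == target  # the copy rule solves every instance of this task
```

A model that cannot implement this kind of lookup-and-copy behaviour will struggle on the task regardless of scale, which is what makes such synthetics useful as mechanistic probes.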

The lesson is clear: reverse engineering and demystifying model mechanisms empowers us to formulate and ask questions we otherwise would not have the primitives for. As we build intelligence, the search space for principled ideas to test grows exponentially; mechanistic understanding provides the A* heuristic towards correct model design.

From 0 to ~1

Each question…

At Tilde, we’ve taken this approach to science to heart and have worked extensively on demystifying model mechanisms. We began with sparse autoencoders, releasing Sieve: the first counterfactually useful application of SAEs in a real-world use case[19]. The takeaway from Sieve wasn’t the success we found, but rather a deeper grasp of the nuances of applying SAEs, and in particular of when it can be better to use something else. We later released Activault, a tool that makes activation-level science almost an order of magnitude cheaper and faster[20].

We dove deep into sparse attention mechanisms, analyzing the conditions under which they outperform dense attention. By visualizing the query-key geometry in sparse attention models we trained, we discovered a fascinating relation between grouped-query attention and sparsity[21].
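
For readers less familiar with grouped-query attention (GQA), here is a minimal sketch of the standard mechanism: several query heads share a single key/value head. It is a plain NumPy illustration of causal GQA only, with hypothetical head counts and shapes; it does not reproduce our sparse attention models or the analysis in [21]. The returned attention weights are the kind of object one would inspect when studying query-key geometry.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def grouped_query_attention(q, k, v):
    """Causal grouped-query attention.

    q: (n_q_heads, seq, d_head); k, v: (n_kv_heads, seq, d_head).
    Each consecutive group of n_q_heads // n_kv_heads query heads shares one key/value head.
    """
    n_q, seq, d = q.shape
    n_kv = k.shape[0]
    if n_q % n_kv != 0:
        raise ValueError("number of query heads must be a multiple of the number of KV heads")
    group = n_q // n_kv
    k = np.repeat(k, group, axis=0)  # KV head j serves query heads j*group ... (j+1)*group - 1
    v = np.repeat(v, group, axis=0)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d)        # (n_q, seq, seq)
    future = np.triu(np.ones((seq, seq), dtype=bool), k=1)  # positions a query may not attend to
    scores = np.where(future, -np.inf, scores)
    weights = softmax(scores)
    return weights @ v, weights


rng = np.random.default_rng(0)
q = rng.normal(size=(8, 5, 4))  # 8 query heads, 5 positions, head dim 4
k = rng.normal(size=(2, 5, 4))  # only 2 key/value heads
v = rng.normal(size=(2, 5, 4))
out, weights = grouped_query_attention(q, k, v)
```

Setting the number of KV heads equal to the number of query heads recovers ordinary multi-head attention; setting it to one gives multi-query attention.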

Most recently, we released MoMoE[22]: the fastest, most memory-efficient MoE kernel for training and fine-tuning. We used this kernel extensively in some of our interpretability work on MoE models, which will be released in the coming weeks. And there’s much more to come.
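
As background on what an MoE layer computes, the sketch below shows plain top-k token routing: a router scores every expert for each token, the k best are selected, and their outputs are mixed with gate weights. It assumes softmax-over-the-selected-experts gating, which is one of several conventions in use, and uses hypothetical random linear experts. It is a naive reference loop, not the MoMoE kernel, and makes no attempt at the speed or memory efficiency a fused kernel targets.

```python
import numpy as np


def topk_moe(x, W_router, experts, k=2):
    """Naive top-k mixture-of-experts layer.

    x: (n_tokens, d_model); W_router: (d_model, n_experts);
    experts: list of callables mapping (m, d_model) -> (m, d_model).
    Returns the mixed outputs and the (n_tokens, k) expert indices chosen per token.
    """
    logits = x @ W_router                                  # router score per token and expert
    topk = np.argsort(-logits, axis=-1)[:, :k]             # the k highest-scoring experts per token
    top_logits = np.take_along_axis(logits, topk, axis=-1)
    gates = np.exp(top_logits - top_logits.max(axis=-1, keepdims=True))
    gates /= gates.sum(axis=-1, keepdims=True)             # softmax over the selected experts only

    out = np.zeros_like(x)
    for e, expert in enumerate(experts):
        token_idx, slot = np.nonzero(topk == e)            # tokens that chose expert e, and in which slot
        if token_idx.size:
            out[token_idx] += gates[token_idx, slot, None] * expert(x[token_idx])
    return out, topk


rng = np.random.default_rng(0)
d_model, n_experts = 4, 3
x = rng.normal(size=(6, d_model))
W_router = rng.normal(size=(d_model, n_experts))
# Hypothetical experts: independent random linear maps
expert_weights = [rng.normal(size=(d_model, d_model)) for _ in range(n_experts)]
experts = [lambda h, W=W: h @ W for W in expert_weights]
out, chosen = topk_moe(x, W_router, experts, k=2)
```

Since the gates of each token sum to one, replacing every expert with the identity returns the input unchanged, which is a handy sanity check for any routing implementation.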

...raises a thousand more

We are in the beautiful position of having many more questions than answers. We are actively thinking about several architectural and training choices, from sequence mixers to learning dynamics in MoEs, and yet we have only scratched the surface of all there is to uncover. Take SwiGLU as an example: its form has been left practically unchanged since Noam Shazeer proposed the architecture in 2020.

Noam famously wrote (on GLUs)[23]:

These architectures are simple to implement, and have no apparent computational drawbacks. We offer no explanation as to why these architectures seem to work; we attribute their success, as all else, to divine benevolence.

Years later, despite a handful of publications on the expressivity of multiplicative networks, there is no fundamental theory or explanation for SwiGLU's overwhelming adoption in models at scale[24][25]. SwiGLU's empirical success raises so many questions: why do some GLU variants perform better than others? How do we fit gating into the perspective and framework of associative memory? Are there generalizations of current gating mechanisms? What objective are GLU variants implicitly optimizing; for instance, does SwiGLU arise as the solution to some closed-form objective? Why do returns diminish as we extend to higher-order multiplicative interactions? And so on.
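
For reference, the GLU-family feed-forward blocks from Shazeer (2020) share one template, FFN(x) = (act(xW) ⊙ xV) W2, and differ only in the gate nonlinearity; SwiGLU uses Swish with β = 1 (i.e. SiLU). The minimal sketch below assumes biases are omitted, uses the tanh approximation of GELU, and picks hypothetical widths and random weights.

```python
import math

import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gelu(z):
    # tanh approximation of GELU
    return 0.5 * z * (1.0 + np.tanh(math.sqrt(2.0 / math.pi) * (z + 0.044715 * z**3)))


# Gate nonlinearity for each member of the GLU family
GATES = {
    "GLU": sigmoid,
    "Bilinear": lambda z: z,
    "ReGLU": lambda z: np.maximum(z, 0.0),
    "GEGLU": gelu,
    "SwiGLU": lambda z: z * sigmoid(z),  # Swish with beta = 1, i.e. SiLU
}


def glu_ffn(x, W, V, W2, variant="SwiGLU"):
    """Gated feed-forward block: (act(x @ W) * (x @ V)) @ W2, without biases."""
    gate = GATES[variant](x @ W)
    return (gate * (x @ V)) @ W2


rng = np.random.default_rng(0)
d_model, d_ff = 8, 16  # hypothetical widths
x = rng.normal(size=(3, d_model))
W, V = rng.normal(size=(d_model, d_ff)), rng.normal(size=(d_model, d_ff))
W2 = rng.normal(size=(d_ff, d_model))
outputs = {name: glu_ffn(x, W, V, W2, name) for name in GATES}
```

Because the gated variants carry three weight matrices instead of two, Shazeer (2020) shrinks their hidden width by a factor of 2/3 to keep parameter counts comparable; the sketch leaves `d_ff` as a free parameter.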

Answering these "simple" questions could yield not-so-simple advances - but simultaneously it is the simple questions that pose the most challenge.

In mathematics, we can succinctly define, in a handful of symbols, an iterated map that produces an intricate fractal or chaotic dynamical system[28], and still be awestruck by its infinitude and unpredictability. Similarly, we may appreciate the beauty of neural networks while never grasping them in their totality.
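
A standard textbook illustration of this point (our choice of example, not one named above) is the logistic map x → r·x·(1 − x). At r = 4 it is chaotic: the sketch below starts two trajectories one part in ten billion apart and tracks how far apart they drift.

```python
def logistic_trajectory(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x) and return every state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)  # perturb the starting point by one part in ten billion
gaps = [abs(x - y) for x, y in zip(a, b)]
print(f"gap after 5 steps: {gaps[5]:.1e}, largest gap overall: {max(gaps):.2f}")
```

The gap stays microscopic for the first few steps, then grows roughly geometrically until the two trajectories become effectively unrelated, even though the entire system is one line of arithmetic.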

It is quite plausible that we will never grasp exactly how intelligence forms and executes, and that we will be forced to hill-climb on mean-field/reductionist approximations or case-by-case studies. And (concerningly for some), the unknown may shrink at a rate exponential in the resources we invest.

Even in this world, we still believe studying model mechanisms is the most principled and fastest path to capitalizing on the intelligence we do develop. The coarse insights we painstakingly harvest inspire novel mechanisms and approaches for building more capable models. And ultimately there is no greater pursuit than understanding - not as a means to an end but as an end in and of itself!

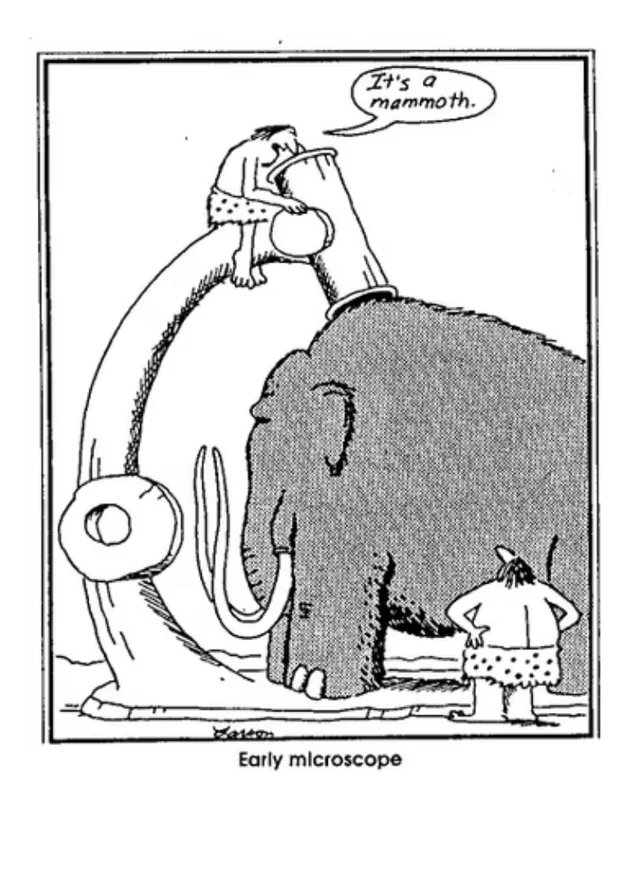

Credit: The Far Side by Gary Larson

For Science!

One of our core values at Tilde is relentless, unforgiving intellectual honesty. It should be completely clear how we achieved stated outcomes and ran evaluations, what our specific contributions are, and whose work we built upon. For example, Kimi K2 openly acknowledges the decision to inherit the DeepSeek-V3 architecture despite the opportunity to differentiate itself. They did so for a completely principled reason: none of the architectures they experimented with could truly outperform V3’s. Kimi could easily have delayed launching their model, kept it closed-source, obfuscated training details, or attributed the performance boost to a small architectural innovation. Especially given the current climate, we view their honesty as an admirable decision. In a similar vein, open-source science and scientific accountability are central to our mission at Tilde.

We aim for all our technical releases to be directly reproducible, actionable, and intentionally crafted, not only because transparency accelerates collective progress, but also because we feel it directly strengthens our own work. By openly sharing not just conclusions but methods, insights, and even negative results, we aim to invite clear and constructive feedback from the community. Open-source science encourages a higher standard of quality and faster progress than building in isolation.

After all, our work would simply not be possible without the public contributions and insights of many talented teams: academia, Anthropic’s Interpretability Team, DeepMind’s Paradigms of Intelligence Team, Meta FAIR, FLA Org, Physics of LM, and many more; the list is quite long. Moving forward, we reiterate our commitment to scientific accountability and OSS, as well as to active engagement with the research community. We hope to contribute back even a fraction of what we've gained.

Good science should be cheap and accessible.

Closing

At Tilde, we are driven to deeply understand existing models and use that insight to derive fundamental improvements that would be difficult to achieve otherwise. The future of intelligent systems is profoundly exciting, and we’re eager to shape it together. If you obsess over these questions, we’re actively hiring founding members of technical staff and invite you to apply.

For academia/independent researchers/small research groups: Our technical releases have a strong emphasis on making science cheaper, faster, and more accessible at scale, thereby expanding the surface area of problems that can be worked on. As such, we routinely collaborate with brilliant researchers to develop tools and joint efforts on understanding model intelligence that wouldn’t be possible otherwise. If that sounds intriguing, please reach out.

Acknowledgements

We would like to acknowledge the gracious feedback from Chris Potts, Yury Polyanskiy, Ken Liu, Quinn McIntyre, Mason Wang, and members of the Tilde technical staff. In particular, last week Chris independently put out a nice piece on this flavor of interpretability work: Assessing skeptical views of interpretability research.

References

- Jaech, Aaron and Kalai, Adam and Lerer, Adam and Richardson, Adam and El-Kishky, Ahmed and Low, Aiden and Helyar, Alec and Madry, Aleksander and Beutel, Alex and Carney, Alex and others (2024).

- Guo, Daya and Yang, Dejian and Zhang, Haowei and Song, Junxiao and Zhang, Ruoyu and Xu, Runxin and Zhu, Qihao and Ma, Shirong and Wang, Peiyi and Bi, Xiao and others (2025).

- Yang, An and Li, Anfeng and Yang, Baosong and Zhang, Beichen and Hui, Binyuan and Zheng, Bo and Yu, Bowen and Gao, Chang and Huang, Chengen and Lv, Chenxu and others (2025).

- Muennighoff, Niklas and Yang, Zitong and Shi, Weijia and Li, Xiang Lisa and Fei-Fei, Li and Hajishirzi, Hannaneh and Zettlemoyer, Luke and Liang, Percy and Candès, Emmanuel and Hashimoto, Tatsunori (2025).

- Martinovic, Zoran and Li, Zhuohan and Sun, Zhiqing and Johnson, Zach and Yang, Yu and Bai, Yu and Song, Yang and Wang, Xin and others (2025).

- Bricken, Trenton and Templeton, Adly and Batson, Joshua and Chen, Brian and Jermyn, Adam and Conerly, Tom and Turner, Nick and Anil, Cem and Denison, Carson and Askell, Amanda and Lasenby, Robert and Wu, Yifan and Kravec, Shauna and Schiefer, Nicholas and Maxwell, Tim and Joseph, Nicholas and Hatfield-Dodds, Zac and Tamkin, Alex and Nguyen, Karina and McLean, Brayden and Burke, Josiah E and Hume, Tristan and Carter, Shan and Henighan, Tom and Olah, Christopher (2023).

- Kamath, Harish and Ameisen, Emmanuel and Kauvar, Isaac and Luger, Rodrigo and Gurnee, Wes and Pearce, Adam and Zimmerman, Sam and Batson, Joshua and Conerly, Thomas and Olah, Chris and Lindsey, Jack (2025).

- Lindsey, Jack and Gurnee, Wes and Ameisen, Emmanuel and Chen, Brian and Pearce, Adam and Turner, Nicholas L. and Citro, Craig and Abrahams, David and Carter, Shan and Hosmer, Basil and Marcus, Jonathan and Sklar, Michael and Templeton, Adly and Bricken, Trenton and McDougall, Callum and Cunningham, Hoagy and Henighan, Thomas and Jermyn, Adam and Jones, Andy and Persic, Andrew and Qi, Zhenyi and Thompson, T. Ben and Zimmerman, Sam and Rivoire, Kelley and Conerly, Thomas and Olah, Chris and Batson, Joshua (2025).

- Deng, M. and Balsam, D. and Gorton, L. and Wang, N. and Nguyen, N. and Ho, E. and McGrath, T. (2025).

- Wang, Miles and la Tour, Tom Dupré and Watkins, Olivia and Makelov, Alex and Chi, Ryan A. and Miserendino, Samuel and Heidecke, Johannes and Patwardhan, Tejal and Mossing, Dan (2025).

- Sutton, Richard (2019).

- Luong, Thang and Lockhart, Edward (2025).

- Chen, Luoxin and Gu, Jinming and Huang, Liankai and Huang, Wenhao and Jiang, Zhicheng and Jie, Allan and Jin, Xiaoran and Jin, Xing and Li, Chenggang and Ma, Kaijing and others (2025).

- Fu, Daniel Y. and Dao, Tri and Saab, Khaled K. and Thomas, Armin W. and Rudra, Atri and Ré, Christopher (2023).

- Elhoushi, Mostafa and Shrivastava, Akshat and Liskovich, Diana and Hosmer, Basil and Wasti, Bram and Lai, Liangzhen and Mahmoud, Anas and Acun, Bilge and Agarwal, Saurabh and Roman, Ahmed and Aly, Ahmed and Chen, Beidi and Wu, Carole-Jean (2024).

- Zhu, Jiachen and Chen, Xinlei and He, Kaiming and LeCun, Yann and Liu, Zhuang (2025).

- Olsson, Catherine and Elhage, Nelson and Nanda, Neel and Joseph, Nicholas and DasSarma, Nova and Henighan, Tom and Mann, Ben and Askell, Amanda and Bai, Yuntao and Chen, Anna and Conerly, Tom and Drain, Dawn and Ganguli, Deep and Hatfield-Dodds, Zac and Hernandez, Danny and Johnston, Scott and Jones, Andy and Kernion, Jackson and Lovitt, Liane and Ndousse, Kamal and Amodei, Dario and Brown, Tom and Clark, Jack and Kaplan, Jared and McCandlish, Sam and Olah, Chris (2022).

- Karvonen, Adam and Pai, Dhruv and Wang, Mason and Keigwin, Ben (2024).

- Wang, Mason and Pai, Dhruv and Keigwin, Ben (2025).

- Pai, Dhruv and Averbuch, Timor and Wang, Mason and Keigwin, Ben (2025).

- Costin, Bobby and Averbuch, Timor and Pai, Dhruv and Chen, Nathan and Keigwin, Ben (2025).

- Shazeer, Noam (2020).

- Fiat, Jonathan and Malach, Eran and Shalev-Shwartz, Shai (2019).

- Jayakumar, Siddhant M. and Czarnecki, Wojciech M. and Menick, Jacob and Schwarz, Jonathan and Rae, Jack and Osindero, Simon and Teh, Yee Whye and Harley, Tim and Pascanu, Razvan (2020).

- Smolensky, Paul (1986).

- Ng, Andrew (UFLDL/CS294A Course Notes, 2011).

- Geshkovski, Borjan and Letrouit, Cyril and Polyanskiy, Yury and Rigollet, Philippe (2025).

Footnotes

- This phenomenon was first identified in 1986 by Smolensky[26], though Anthropic later validated superposition in transformer models.

- Sparse autoencoders have existed for some decades[27], but this was their first application to activation-level interpretability.

- In fairness, it’s difficult to attribute the success precisely to scaled reasoning rather than to prompt optimization or context-engineering techniques leveraging human overseers.